Ammeter to Voltmeter...who does it?

A higher output alternator will not increase the load on anything. It's the equipment that creates the load. The ignition, headlights, and wipers are only going to draw what they need. Or more precisely, the current through them is limited by their internal resistance and the voltage across them. The resistance is fixed and the voltage is regulated.

Let's take a pair of headlights, nominally rated 60/55 Watts. At 12 Volts, about 9 amps will pass through them on low beam (2 x 55 Watts). With the alternator running and regulated to 14 Volts, it could be 11 amps. Only 11 amps will flow through those wires regardless of the capacity of the alternator.

There is one item that doesn't have a fixed resistance: the battery. If the battery is low, it will draw more current at any given voltage. So this is the one circuit where a higher output alternator could potentially fry connectors, and fry or at least weaken the fusible link.
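For anyone who wants to check the headlight numbers, here is a minimal sketch of the arithmetic in Python. It assumes each low beam behaves as a fixed resistance rated 55 Watts at 12 Volts (a real filament's resistance rises a bit as it gets hotter, so treat the result as an upper estimate). The function name and its parameters are mine, just for illustration.

```python
def headlight_current(rated_watts, rated_volts, actual_volts, count=2):
    """Current drawn by `count` identical lamps, treated as fixed resistors.

    Assumption: each lamp's resistance is constant and set by its rating,
    R = V_rated^2 / P_rated.
    """
    resistance = rated_volts ** 2 / rated_watts  # ohms per lamp
    return count * actual_volts / resistance     # amps for all lamps


# Two 55 W low beams, rated at 12 V
print(round(headlight_current(55, 12, 12), 1))  # engine off, 12 V
print(round(headlight_current(55, 12, 14), 1))  # alternator regulating at 14 V
```

Notice the alternator's rating does not appear anywhere in the calculation. Only the voltage and the lamp's resistance matter.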

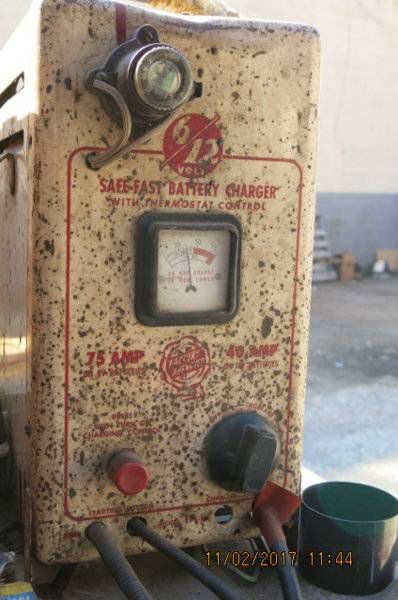

Here's a low battery in my Wagoneer, on a charger with manual regulation. Initially it draws over 30 amps at 14.2 Volts.

Well, I don't want that, because it makes a hot battery, and a hot battery has a harder time accepting a charge. It can also cook off the acid, which is very bad in an AGM battery.

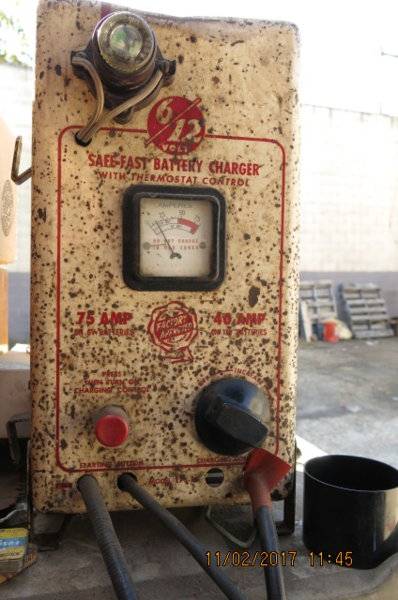

So I reduce the voltage and current.

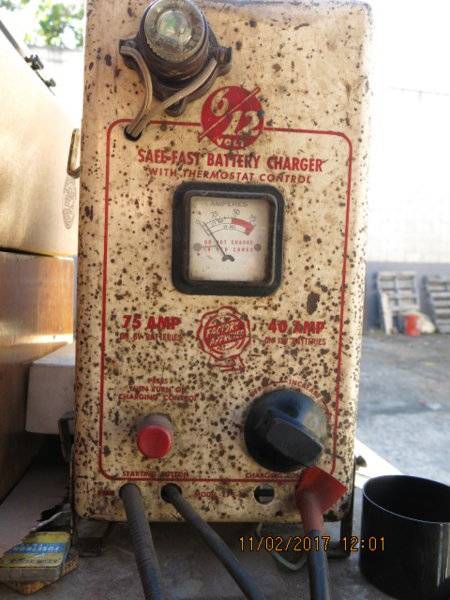

But 15 minutes later, it's charging at less than 5 amps even though the voltage is again reset to 14.5 V.

With an alternator, where the voltage is regulated, some other strategies need to be used. That's why I asked about the regulation of the coach battery charging and was speculating about the battery-to-battery connector with the smaller section wire.

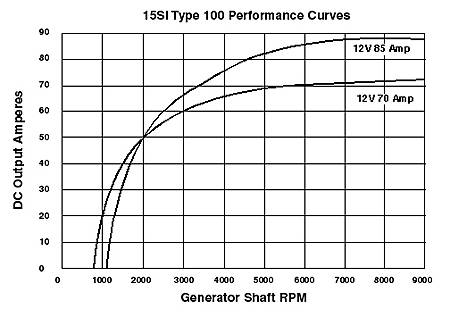

The maximum power an alternator can provide varies with rpm. They can be pretty poor performers at low rpm but quickly do better as rpm increases. When testing for maximum power, the regulated voltage also makes a difference. So the power ratings are a bit of a game. It seems that most companies rate their alternators at something close to maximum rpm, and it's a crapshoot as to what voltage.

(rpm is alternator rpm)

Another real life example. A couple of years ago I left the parking lights on after driving in patchy fog. After work, I got a jump start. The ammeter showed roughly 10 amps charging. As soon as I started driving, the charge rate went up. Doing over 35 mph it was a bit over 30 amps. Way too much for my comfort on a 20 to 30 minute drive. So I put it into N and let it idle on all the downslopes, drove closer to 25 mph, and turned on the headlights to divert a portion of the current. My headlights are on relays, so this also reduced the current through the alternator wire and connections into the car. All these tricks worked because the alternator's maximum output is lower at lower rpm.

When I got back to our base camp, the battery was hot. We didn't have a charger. The next morning it had cooled off, so I started it and basically used the same techniques. Having had a chance to cool and get somewhat recharged, after about a half hour it was only drawing a few amps regardless of the speed I was driving. :)

The point here is not that a lower rated alternator is preferred. The above is an unusual situation. Rather, this is an illustration of how the alternator output and battery charging relate.

In fact, under normal conditions, it is most desirable for an alternator to have enough power at idle speed to run everything at 14 Volts.

If it can't, then the battery must provide some. Then as rpm rises, the alternator has to recharge the battery. If this happens for hours on end, like being stuck in traffic with A/C and lights on in 90 degree weather, the battery gets more and more drained, and when the car finally does move, the charging rate is high. The result is constant load, some of it high, on the fusible link, connectors, etc. in the charging circuit.

In the 60s, this was probably not in the design scenario. Rather, the only big drain would be starting, and after starting the engine would be on fast idle, easily taking care of the normal recharge from a cold start.

I suppose it's possible your camper's design took advantage of the alternator's lower output at idle speed to somewhat recharge the battery, but you would need to find somebody who really knew. It seems kind of crude and uncertain, as an owner might start it up and then go hit the freeway.
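To put rough numbers on the stuck-in-traffic case, here is a small sketch of the bookkeeping. Every figure in it is made up for illustration (a 45 amp accessory load, 30 amps available from the alternator at idle, two hours of idling); none of them are measurements from my car or anyone else's, and the function name is just a label I picked.

```python
def battery_drain_ah(load_amps, alternator_amps_at_idle, hours):
    """Amp-hours pulled from the battery while idling.

    Assumption: the load and the alternator's idle output are both
    constant. If the alternator covers the load, nothing is drained.
    """
    shortfall = max(0.0, load_amps - alternator_amps_at_idle)
    return shortfall * hours


# Hypothetical: 45 A of load, only 30 A available at idle, 2 hours in traffic
print(battery_drain_ah(45, 30, 2))  # amp-hours the battery had to supply
```

Whatever comes out of the battery at idle has to go back in once the rpm comes up, and that recharge current is what loads the fusible link and connectors.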

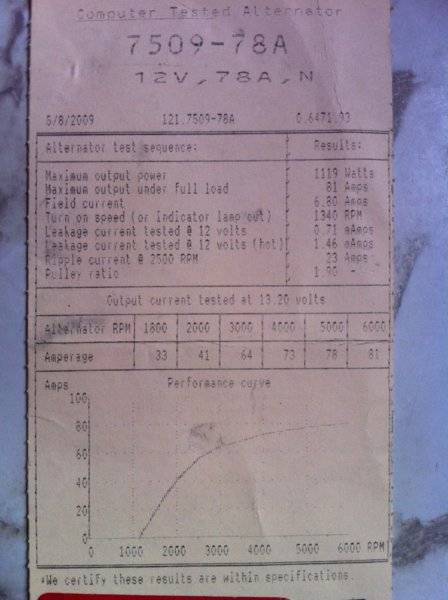

One more item. :) While a higher alternator rating is often better, sometimes it is achieved at the expense of low rpm performance. The example is from the Remy-Delco catalog for a GM alternator, sorry, generator. LOL. GM never stopped calling them generators.

I can't answer the questions about what came on it, and the differences.
